Training a Wan 2.2 LoRA is much like training a Wan 2.1 LoRA. The main difference is that Wan 2.2 has two transformer models (high noise and low noise). We have already seen how rewarding it is to train your own stylized LoRA for images, and for videos the results are even more impressive.

This means you need to train a LoRA for each of the two models, and because of the large architecture this requires a fairly powerful machine. To train our LoRA, we will use AI-Toolkit.

This tutorial covers Wan 2.2 LoRA training for Text to Image, Text to Video, and Image to Video. Most settings are the same across these modes; there are only a few differences that you need to take care of.


Requirements

1. A Linux or Windows machine. macOS and low-end PCs are not supported. It is often better to use a third-party cloud service such as Runpod, which costs roughly $2/hr for a decent GPU.

2. An NVIDIA RTX 4090/5090 or better with at least 24 GB of VRAM (for images). Video training needs considerably more.

3. Git installed

4. NodeJs installed

5. Python greater than 3.10 installed
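
Before installing, it can help to confirm that the tools listed above are visible on your PATH. The following is a minimal Python sketch and is not part of AI-Toolkit. The function name `check_prerequisites` is made up for illustration, and apart from Python itself it only checks that the executables exist, not their versions.

```python
import shutil
import sys


def check_prerequisites():
    """Return a dict mapping each requirement to True/False.

    Hypothetical helper for illustration; it is not part of AI-Toolkit.
    """
    return {
        # The tutorial asks for Python "greater than 3.10". Accepting 3.10
        # itself here is an assumption; tighten the comparison if needed.
        "python>=3.10": sys.version_info[:2] >= (3, 10),
        # shutil.which returns None when the executable is not on PATH.
        "git": shutil.which("git") is not None,
        "node": shutil.which("node") is not None,
        "npm": shutil.which("npm") is not None,
        # nvidia-smi ships with the NVIDIA driver, so finding it suggests
        # that a GPU driver is installed.
        "nvidia-smi": shutil.which("nvidia-smi") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_prerequisites().items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
```

Anything reported as MISSING should be installed before moving on to the next section.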

Install AI Toolkit

1. If you already have AI Toolkit installed, update it: move into its root folder and run git pull. New users need to install the AI Toolkit UI. Open a terminal and use the following commands.

(a) For Windows:

Use the AI-Toolkit automatic installation .bat file from the GitHub repository. It handles updates and downloads all the required files (Python, CUDA, Git, NodeJs etc) automatically. Just download the AI-Toolkit-Easy-Install.bat file and double-click it to start the installation.

(b) For Linux:

-Clone AI toolkit repo:

git clone https://github.com/ostris/ai-toolkit.git

-Move into folder:

cd ai-toolkit

-Create virtual environment:

python3 -m venv venv

-Activate virtual env:

source venv/bin/activate

-Install torch:

pip3 install --no-cache-dir torch==2.7.0 torchvision==0.22.0 torchaudio==2.7.0 --index-url https://download.pytorch.org/whl/cu126

-Install requirements dependencies:

pip3 install -r requirements.txt

2. After installation, move into the ui folder and start the UI:

cd ui

npm run build_and_start

3. Now open the AI Toolkit UI at the following address in your browser:

http://localhost:8675
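
If the page does not load, a quick script can tell you whether anything is answering on that address at all. This is a small sketch using only the Python standard library. The helper name `ui_is_up` is hypothetical, and it only checks that an HTTP server responds, not that the response really comes from AI Toolkit.

```python
import urllib.error
import urllib.request


def ui_is_up(url="http://localhost:8675", timeout=3.0):
    """Return True if an HTTP server answers at `url` (hypothetical helper)."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False


if __name__ == "__main__":
    print("UI reachable" if ui_is_up() else "UI not reachable yet")
```

A result of "not reachable" right after starting usually just means the build step has not finished yet.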

Prepare the Dataset and Configure Settings

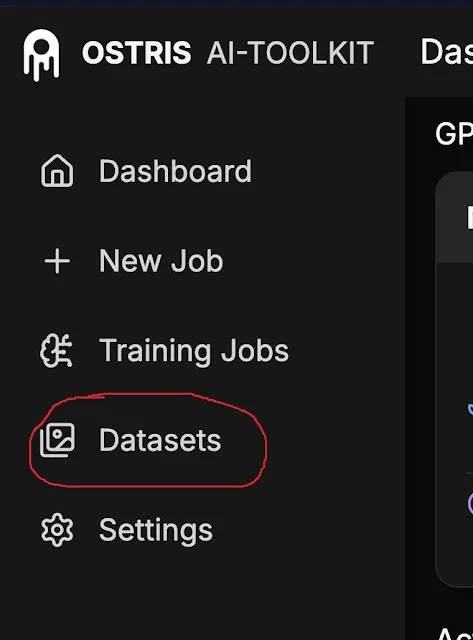

1. Open the AI Toolkit dashboard. Click Datasets in the left tab, then click New Dataset in the top right corner. Enter a name for your dataset and click Ok to confirm.

2. Next, add 10-15 images (png or jpg) or videos (mp4) from your local machine by clicking the button in the top right corner. Keep in mind that videos need much more VRAM. As usual, keep the file format consistent and the content varied when preparing the dataset.

If you are training a motion-specific video LoRA (for example camera panning or zooming), use videos as samples for best results (at least 5 seconds; longer clips are handled automatically). For ordinary video LoRAs, images can work.

For image/video samples you can try royalty-free content from websites like Unsplash, Pexels etc. For example, here we want to train specifically for a TikTok female influencer, so we uploaded 10-15 images of the same girl in different poses.

3. Add a caption for each image/video you uploaded.

4. Now head over to the New Job section and create a new job with a relevant name.

JOB:

For example, we used the name "Wan2.2_I2V_14b_Tiktok_girl". Now set the parameters for the options below:

Trigger word- Add a trigger word if you want one, or leave this empty if your captions already cover it.

Model (Model Architecture): Wan 2.2 I2V 14b

We selected the Wan 2.2 I2V 14b model architecture because we want to train an I2V LoRA. If you want to train a Text to Video LoRA, use Wan 2.2 T2V 14b instead.

MULTISTAGE:

Stages To Train- High Noise and Low Noise. You can select either one or both. If you select both, training alternates between them every few steps (set below) and produces two separate LoRA models (High and Low).

Switch Every- The number of steps to train one model before switching to the other. A lower value means more frequent switching, which takes more time, especially with low VRAM. Use 10-15 for low VRAM.

Options (Low VRAM): Enable Low VRAM if you have a consumer-grade GPU with less VRAM (16-24 GB). This option uses block swapping to load and unload the High and Low noise models.
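
To get a feel for what Switch Every does to the step budget, here is a small sketch of an alternating schedule. It is an illustration, not AI-Toolkit's actual scheduler. It assumes that training starts with the high noise model and moves to the other model every `switch_every` steps. The function names are made up for this example.

```python
from collections import Counter


def stage_for_step(step, switch_every, stages=("high_noise", "low_noise")):
    """Return which model would be trained at a zero-based `step`.

    Assumption: training starts with the first entry in `stages` and
    moves to the next one every `switch_every` steps.
    """
    if switch_every < 1:
        raise ValueError("switch_every must be at least 1")
    return stages[(step // switch_every) % len(stages)]


def steps_per_stage(total_steps, switch_every):
    """Count how many of `total_steps` land on each model."""
    return Counter(stage_for_step(s, switch_every) for s in range(total_steps))


if __name__ == "__main__":
    # Which model is active around the first two switches.
    print([stage_for_step(s, 10) for s in (0, 9, 10, 19, 20)])
    # How a 3000-step budget is split between the two models.
    print(dict(steps_per_stage(3000, 10)))
```

Under these assumptions the total step count is shared, so with both stages selected each LoRA receives about half of the steps you set.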

QUANTIZATION:

Transformer: Select the float type for your model. If you do not know what this is, follow our Quantization tutorial for a more detailed explanation.

Text Encoder- FP8 (default)

TARGET:

Target (Target Type): LoRA

Linear Rank- 16-32

TRAINING:

Batch Size-1

Gradient Accumulation-1

Steps-3000 to 5000 (good). More steps can give better results but take longer to train.

Learning rate- 0.0002 for a faster learning pace (default-0.0001)

Time Step Type- Linear (default, for video style), Sigmoid (for person/character), Shift (focused on first frame), Weighted (for the timestep bias mentioned below)

Time Step Bias- Balanced (default), High Noise, Low Noise. This bias focuses training on a specific model type.

Cache Text Embedding- Enable if you have low VRAM. This loads and unloads the text encoder as needed. If enabled, do not use a trigger word, otherwise you will get an error.
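
As a rough sanity check on the Steps value, you can estimate how many times each dataset item is seen during training. This is back-of-the-envelope arithmetic, not something AI-Toolkit reports. It assumes each step consumes batch size times gradient accumulation samples, and that steps are split evenly when two stages are trained. The function name is hypothetical.

```python
def passes_over_dataset(steps, batch_size, grad_accum, num_items, num_stages=1):
    """Estimate how often each dataset item is seen per trained model.

    Assumptions: every step consumes batch_size * grad_accum samples, and
    the steps are shared equally between `num_stages` models.
    """
    if num_items < 1 or num_stages < 1:
        raise ValueError("num_items and num_stages must be at least 1")
    samples_seen = steps * batch_size * grad_accum
    return samples_seen / num_items / num_stages


if __name__ == "__main__":
    # 3000 steps, batch size 1, gradient accumulation 1, 15 images.
    print(passes_over_dataset(3000, 1, 1, 15))
    # The same budget shared between the high and low noise models.
    print(passes_over_dataset(3000, 1, 1, 15, num_stages=2))
```

If the estimate looks very low for your dataset size, raising Steps is the simplest lever, at the cost of training time.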

DATASETS:

Num Frames: Use 1 if you are using an image dataset.

Use 81 if you are using a video dataset (Wan 2.2 I2V 14b officially uses 81 frames). The value must be a multiple of 4 plus 1, such as 9 (8+1), 41 (40+1), or 81 (80+1).

Here, we are training a character LoRA, so we use images as the dataset and set the value to 1.
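
The "multiple of 4 plus 1" rule for Num Frames is easy to get wrong by one, so here is a small sketch that checks a value and snaps an arbitrary frame count down to the nearest valid one. The helper names are made up for illustration and are not part of AI-Toolkit.

```python
def is_valid_num_frames(n):
    """True if n has the form 4k + 1 with k >= 0 (1, 5, 9, ..., 81, ...)."""
    return n >= 1 and (n - 1) % 4 == 0


def snap_num_frames(n):
    """Return the largest valid frame count not exceeding n (minimum 1)."""
    if n < 1:
        return 1
    return ((n - 1) // 4) * 4 + 1


if __name__ == "__main__":
    for frames in (1, 9, 41, 80, 81):
        print(frames, is_valid_num_frames(frames), snap_num_frames(frames))
```

For example, a clip with 80 usable frames does not fit the rule, and rounding down gives 77.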

Flipping: Leave this disabled. It augments the dataset by flipping your images horizontally and vertically during training, which is only useful for LoRAs where the subject looks the same from every perspective, such as 3D animated kaleidoscope videos.

Resolution- Use 256 and 512 for low VRAM. For high VRAM use 512, 768 and 1024 pixels.

SAMPLE:

Num Frames- Use 81 if you are sampling videos (Wan 2.2 I2V 14b officially uses 81 frames). The value must be a multiple of 4 plus 1, such as 9 (8+1), 41 (40+1), or 81 (80+1).

FPS-1

Sample Prompts(n): Add 4-6 prompts for your samples. These act as the control set used to test how your LoRA performs when images are used as input. Include the trigger word if required. Next, add the respective control images by clicking Add Control Images on the right.

5. Finally, hit the Create Job button in the top right corner, then click the Play button to start the training.

Start your Training Process

1. After creating the job, click the Play button to start training. Your job has now been added. You can stop and restart training at any time, and it resumes from where it left off.

2. On the left, you will see the console output for the running job, with steps, iterations, timings etc. The first time you start training, the models are downloaded in the background.

3. On the right, your GPU status (temperature, fan speed, clock speed, GPU load, memory etc) is shown.

4. Check the Samples tab periodically, about every 100 steps, to see how your LoRA is progressing. This gives you a clear picture of the results your trained model will generate.

If you think the results are not good enough, adjust the settings mentioned above (Num Frames etc) and restart your training.

Remember that this is the Wan 2.2 variant (high + low noise), not Wan 2.1, so training takes longer, almost 2-3 days on a powerful consumer-grade setup. We used a GPU cloud service to train our video LoRA (I2V) on an NVIDIA A6000 with 96 GB VRAM, which took almost 24 hrs.

5. After training, your Wan 2.2 high noise and low noise LoRA files (.safetensors) will be in the ai-toolkit/output folder. You can push your trained LoRA to your Hugging Face repository from a remote or local machine. If you do not know how to do it, follow our Cloning models to Hugging Face repo tutorial.
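
Once training has produced several checkpoints, it can be handy to list the .safetensors files and separate the high noise ones from the low noise ones. The sketch below does that with the standard library. It assumes the file names contain "high_noise" or "low_noise"; check your own output folder, since that naming is a guess made for this example. The function name is hypothetical as well.

```python
from pathlib import Path


def find_lora_files(output_dir):
    """Group .safetensors files under `output_dir` by noise stage.

    Assumption: file names contain "high_noise" or "low_noise". Files
    matching neither pattern are returned under "other".
    """
    groups = {"high_noise": [], "low_noise": [], "other": []}
    for path in sorted(Path(output_dir).rglob("*.safetensors")):
        name = path.name.lower()
        if "high_noise" in name:
            groups["high_noise"].append(path)
        elif "low_noise" in name:
            groups["low_noise"].append(path)
        else:
            groups["other"].append(path)
    return groups


if __name__ == "__main__":
    # "ai-toolkit/output" is the folder named in step 5 above.
    for stage, files in find_lora_files("ai-toolkit/output").items():
        print(stage, [f.name for f in files])
```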

Running your Lora model using ComfyUI

1. Load the basic Wan 2.2 I2V 14b or Wan 2.2 T2V 14b workflow. If you do not know how to work with the workflow, follow our Wan 2.2 workflows tutorial. To download the basic Wan 2.2 workflows, visit our Hugging Face repository.

2. Place your high and low noise LoRA files in the ComfyUI/models/loras folder. Load the other models (Wan 2.2 high/low noise I2V models, text encoders, VAE etc) as you usually do for the Wan 2.2 14b workflow.

3. To use the LoRA models, you need to make a few changes to the basic workflow. Add two new LoraLoaderModelOnly nodes by searching for them in the ComfyUI node list.

4. Load your high noise LoRA into the first LoraLoaderModelOnly node and your low noise LoRA into the second. Connect the first LoraLoaderModelOnly node with the ModelSamplingSD3 node and the first KSamplerAdvanced node. Then connect the second LoraLoaderModelOnly node with the ModelSamplingSD3 node and the second KSamplerAdvanced node.

5. Load your image into the Load Image node to generate your video. Use the basic KSampler settings for the Wan 2.2 I2V 14b/Wan 2.2 T2V 14b model.

6. Add your relevant prompts with the trigger word. Hit Run to start the generation.
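
If you retrain often, step 2 (placing the files in ComfyUI/models/loras) can be scripted. Below is a minimal sketch that copies a list of LoRA files into that folder. The function name `install_loras` and the example paths are hypothetical, and it overwrites files with the same name, so adjust it if you want to keep older versions.

```python
import shutil
from pathlib import Path


def install_loras(lora_files, comfyui_root):
    """Copy LoRA files into <comfyui_root>/models/loras and return the new paths."""
    root = Path(comfyui_root)
    if not root.is_dir():
        # Fail early instead of silently creating a folder tree in the wrong place.
        raise FileNotFoundError(f"ComfyUI folder not found: {root}")
    target = root / "models" / "loras"
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for src in lora_files:
        dst = target / Path(src).name
        shutil.copy2(src, dst)  # overwrites an existing file with the same name
        copied.append(dst)
    return copied


if __name__ == "__main__":
    # Example paths only; replace them with your own files and ComfyUI location.
    files = ["ai-toolkit/output/my_lora_high_noise.safetensors",
             "ai-toolkit/output/my_lora_low_noise.safetensors"]
    print(install_loras(files, "ComfyUI"))
```

After copying, refresh ComfyUI so the new files show up in the LoraLoaderModelOnly dropdowns.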